Nonlinear Human-AI Interaction Design Tool

Research Paper

Human-AI Interaction

AI Creative Support Tool (CST)

Design Process

Developing alternate workflow paradigms with generative AI tools:

Creative 3D Modeling Through Human-AI Co-creative Interaction:

An Intermittent, Incremental, and Non-Linear Workflow Framework

Co-authors: Jingyi Wang, Jianing Nomy Yu, Jose Luis Garcia del Castillo y Lopez

Status: Presented at ACADIA conference 2025

The creative processes of digital artists and designers are often exploratory, iterative, and open-ended, which puts them at odds with existing generative AI tools, most of which operate through linear input-to-output paradigms.

This research project and paper present the design and evaluation of a prototype generative AI creative support tool (CST) aimed at fostering incremental and selective interactions, enabling artists to maintain authorship while leveraging AI for inspiration.

Through user testing with 3D modellers in architecture and design, we observed that the tool supported non-linear workflows by introducing novel ideas, encouraging non-linear re-evaluation of designs, and reinforcing a sense of artistic authorship over the output. However, participants also faced challenges, including difficulties integrating AI outputs into their workflows and moments of creative friction when outputs diverged from their intentions.

This work contributes to ongoing efforts in reimagining generative AI systems used by artists and designers, and proposes an alternate workflow framework to support non-linear, iterative, and discursive creative processes.

Download Paper

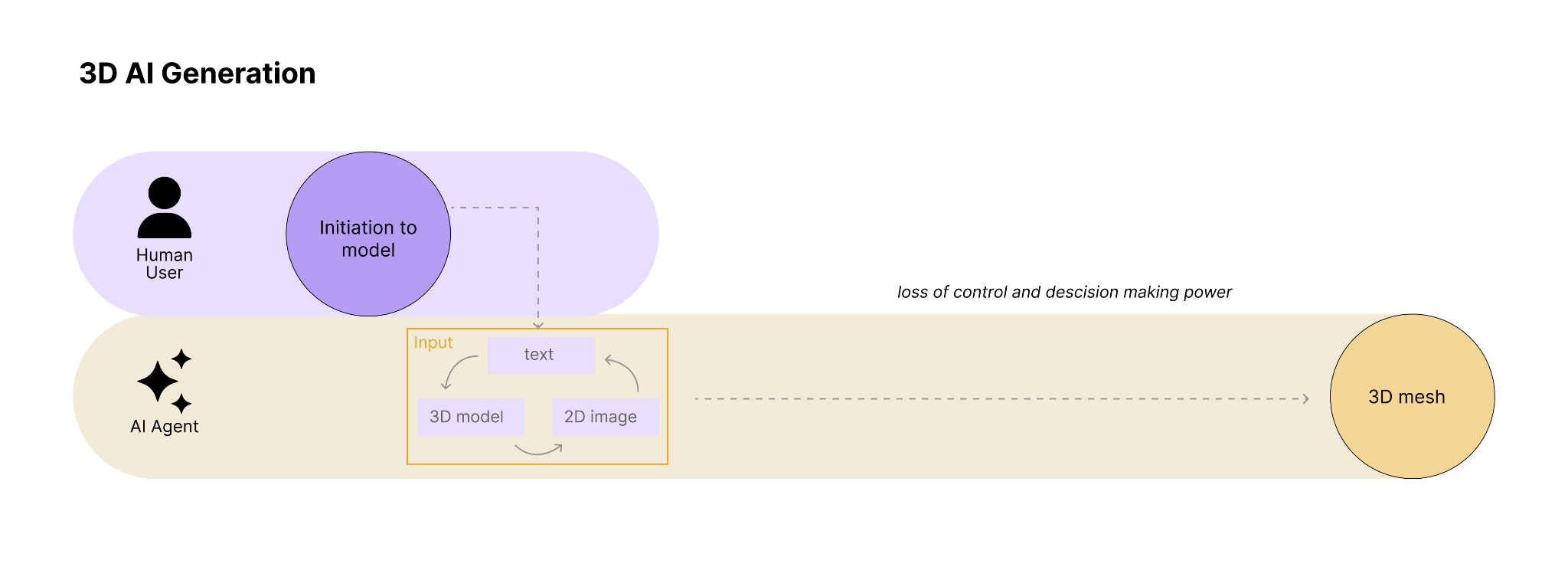

Excerpt:

Recent advancements in generative AI have led to increasingly sophisticated outputs in both 2D and 3D media. Notable innovations in text-to-3D tools, such as those offered by Luma.ai, Tripo, and Hyperhuman, demonstrate significant strides in creating high-fidelity 3D meshes and point clouds from minimal input. However, the directness of their input-output workflows often results in outputs that feel overly "finished," leaving little room for artist-driven exploration or refinement. This output-centric approach creates challenges for artists and designers striving to assert their stylistic identity and creative agenda. The situation is analogous to a designer presenting a photorealistic rendering to a client: the client can feel unable to suggest further development because of the perceived completeness of the work, which inhibits meaningful dialogue about iterative refinement. When the designer instead presents rough sketches or preliminary drafts, the unfinished appearance invites collaboration and more engaged input from the client. With generative AI tools, the artist has become the client receiving the rendered images, feeling unable to influence the output.

Such dynamics have triggered concerns within the discipline about the potential erosion of human authorship in human-AI creative workflows. As Ziv Epstein et al. articulate in their work on creativity and generative AI, this has been framed as a question of achieving “meaningful human control” (MHC), where MHC is “achieved if human creators can creatively express themselves through the generative system, leading to an outcome that aligns with their intentions and carries their personal, expressive signature. Future work is needed to investigate in what ways generative systems and interfaces can be developed that allow more meaningful human control by adding input streams that provide users fine-grained causal manipulation over outputs”. Existing generative AI tools and their user interfaces frequently lack the affordances necessary to support such control.

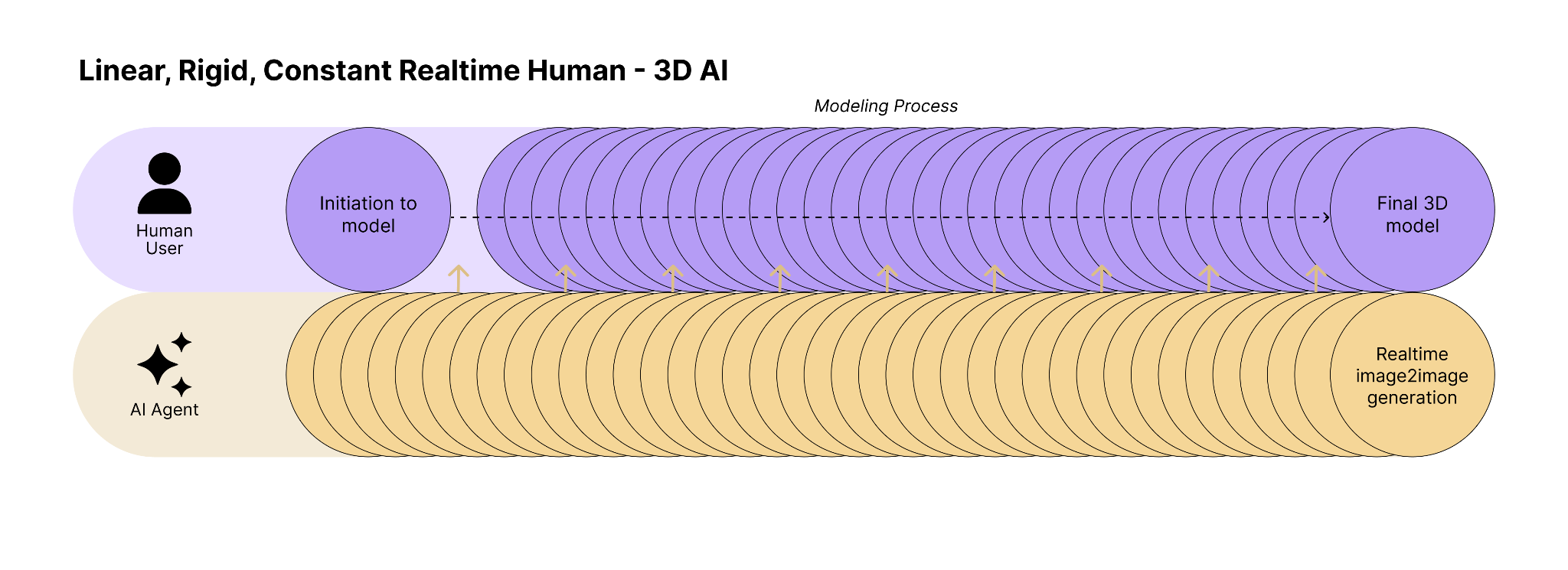

Three “workflow frameworks” of Human-AI Interaction design interfaces:

Type 1: Direct (Currently popular tools such as Midjourney, Stable Diffusion, and Luma fall under this workflow.)

Type 2: Intermittent and Constant (The real-time feature of Krea.ai and the VR x AI Realtime Workflow sculpture project are examples of this workflow.)

Type 3: Non-linear, Incremental, Intermittent (Our Human-AI design tool is proposed under this model, with the goal of foregrounding human agency and facilitating the non-linear, discursive nature of human design workflows.)
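As a rough illustration only, the difference between the three workflow types can be sketched in a few lines of Python. This is our own toy reading of the labels, not the prototype's implementation: `Node`, `direct`, `constant`, `incremental`, and `toy_suggest` are hypothetical names, and the generative model is replaced by a placeholder function that appends a marker to a string.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical stand-in for a generative model: design state in, suggestion out.
Suggest = Callable[[str], str]


@dataclass(eq=False)
class Node:
    """One saved design state in a branching history (illustrative only)."""
    state: str
    parent: Optional["Node"] = field(default=None, repr=False)
    children: List["Node"] = field(default_factory=list)

    def branch(self, new_state: str) -> "Node":
        """Record a new state derived from this one, keeping this one intact."""
        child = Node(new_state, parent=self)
        self.children.append(child)
        return child


def direct(prompt: str, suggest: Suggest) -> str:
    """Type 1 (assumed reading): one prompt in, one finished output back."""
    return suggest(prompt)


def constant(edits: List[str], suggest: Suggest) -> List[str]:
    """Type 2 (assumed reading): the AI responds to every user edit as it happens."""
    return [suggest(edit) for edit in edits]


def incremental(node: Node, suggest: Suggest, accept: Callable[[str], bool]) -> Node:
    """Type 3 (assumed reading): the user requests a suggestion at a chosen state
    and keeps it as a new branch only if they accept it."""
    proposal = suggest(node.state)
    return node.branch(proposal) if accept(proposal) else node


def toy_suggest(state: str) -> str:
    """Placeholder 'model' that just marks the state as AI-modified."""
    return state + "+ai"


# The user edits, accepts one suggestion, then returns to the root and branches again.
root = Node("sketch")
edited = root.branch("sketch/massing")
kept = incremental(edited, toy_suggest, accept=lambda p: True)
alternative = root.branch("sketch/alt")
```

In this sketch, the non-linear quality comes from the history being a tree rather than a sequence: any earlier state can be revisited and branched from, and a rejected suggestion leaves the design untouched.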

Specifically, this research includes:

(1) the design and implementation of a proof-of-concept prototype that embodies these principles of incremental, intermittent, and non-linear human-AI interaction, offering a case study of how generative AI tools can be integrated into the workflows of digital artists and designers; and

(2) user studies that examine and analyze user interactions with this system, in order to generate insights into the affordances that future generative AI systems need to truly support meaningful human control and creative expression.

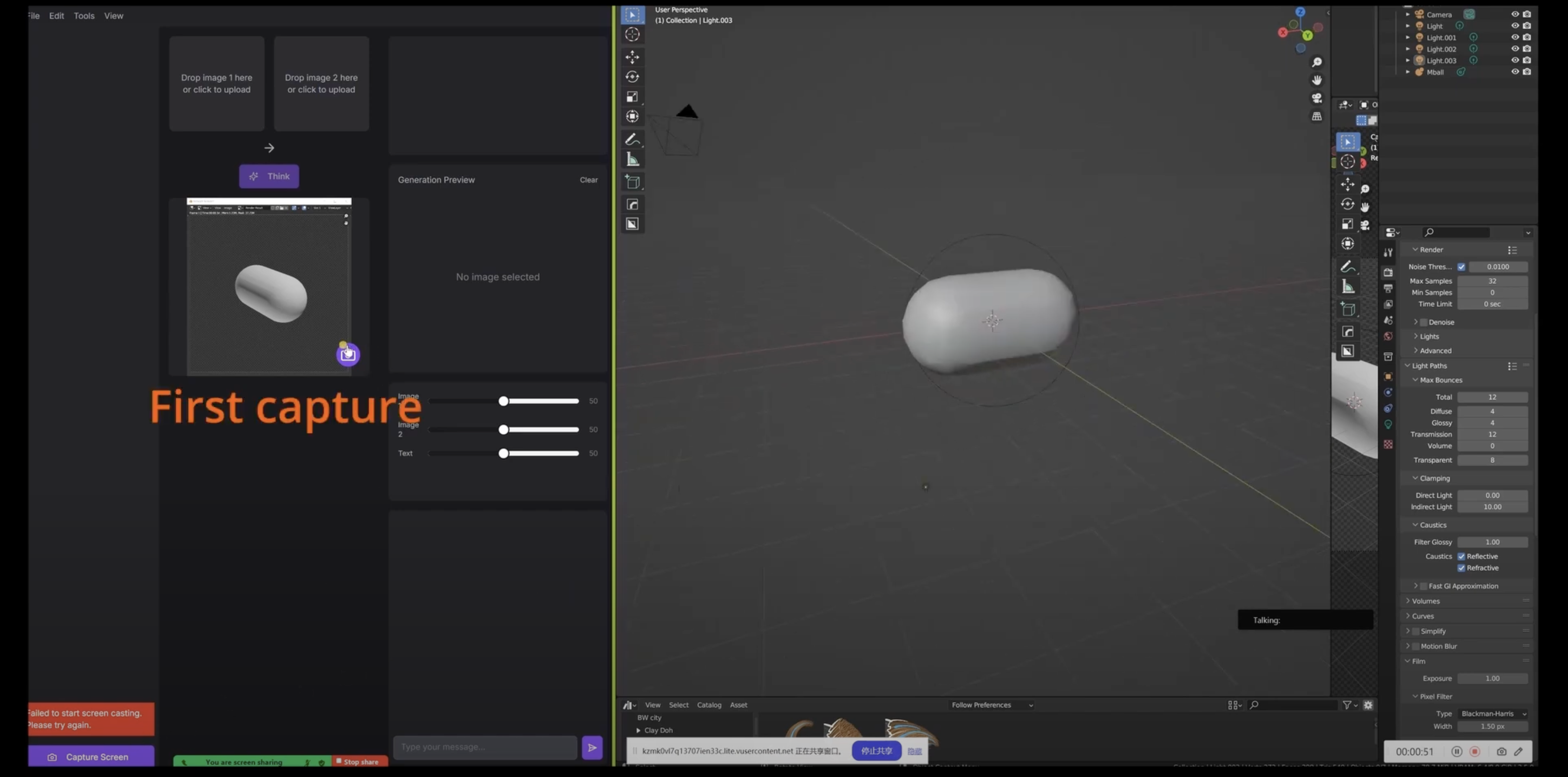

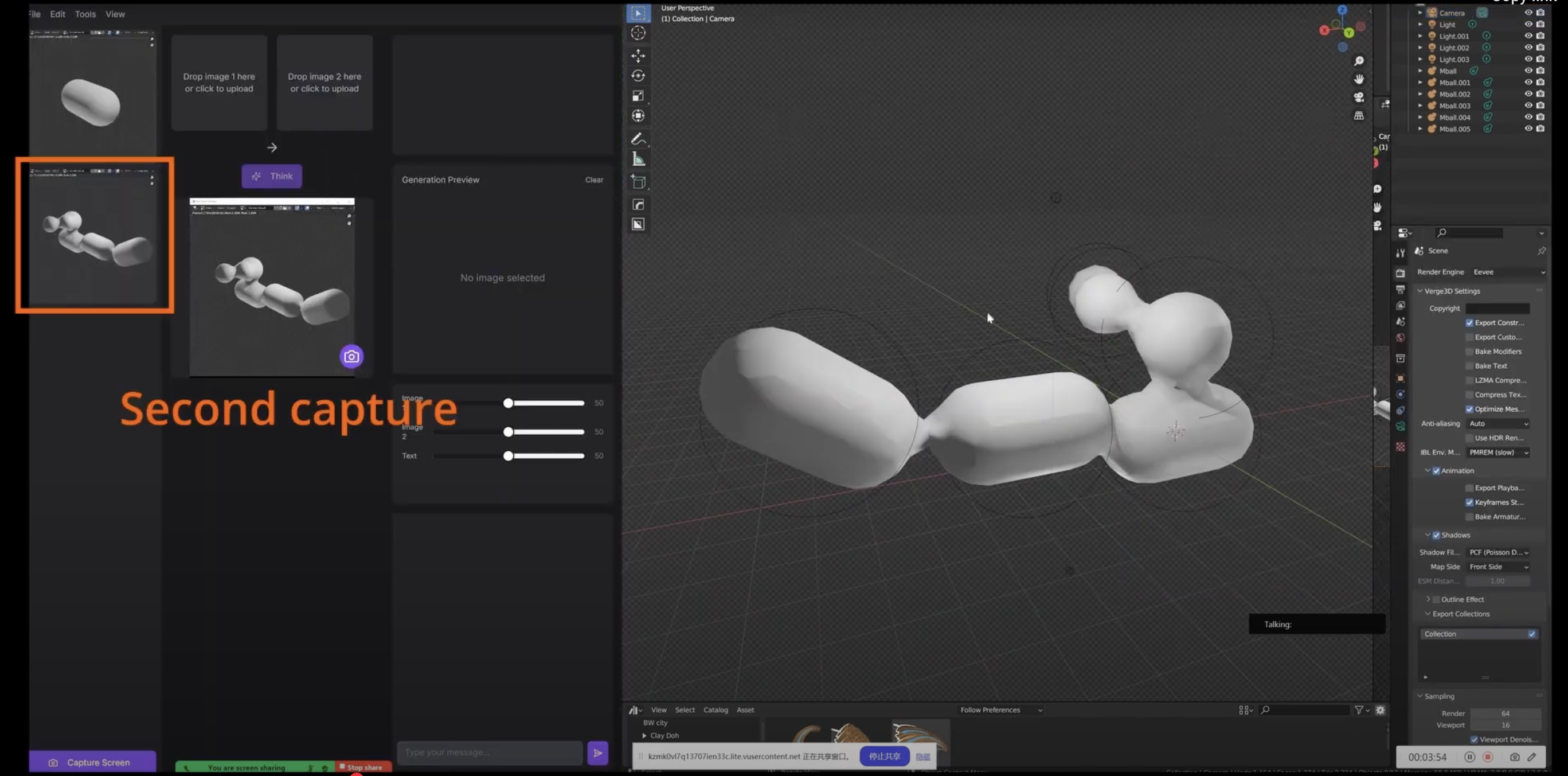

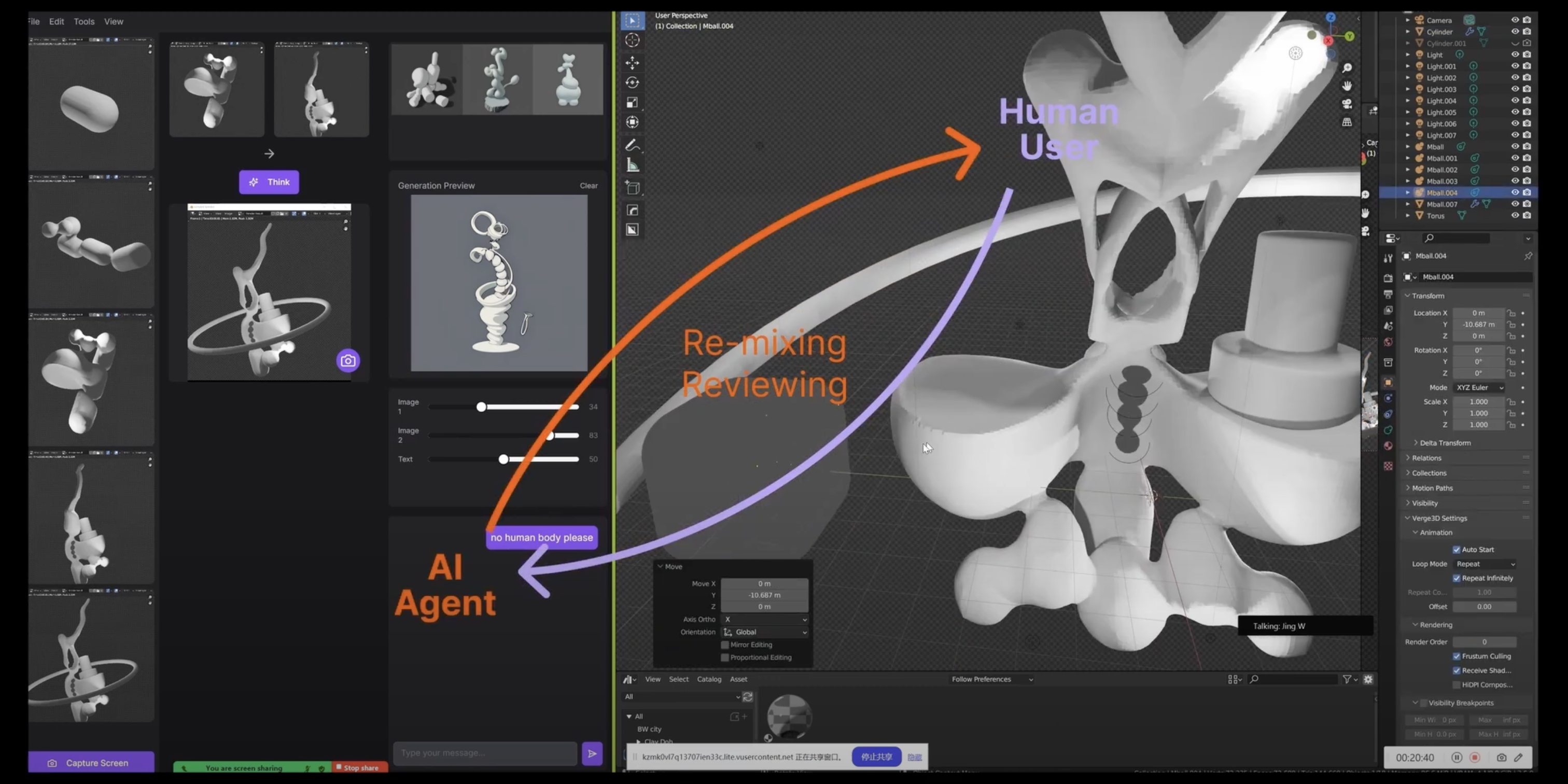

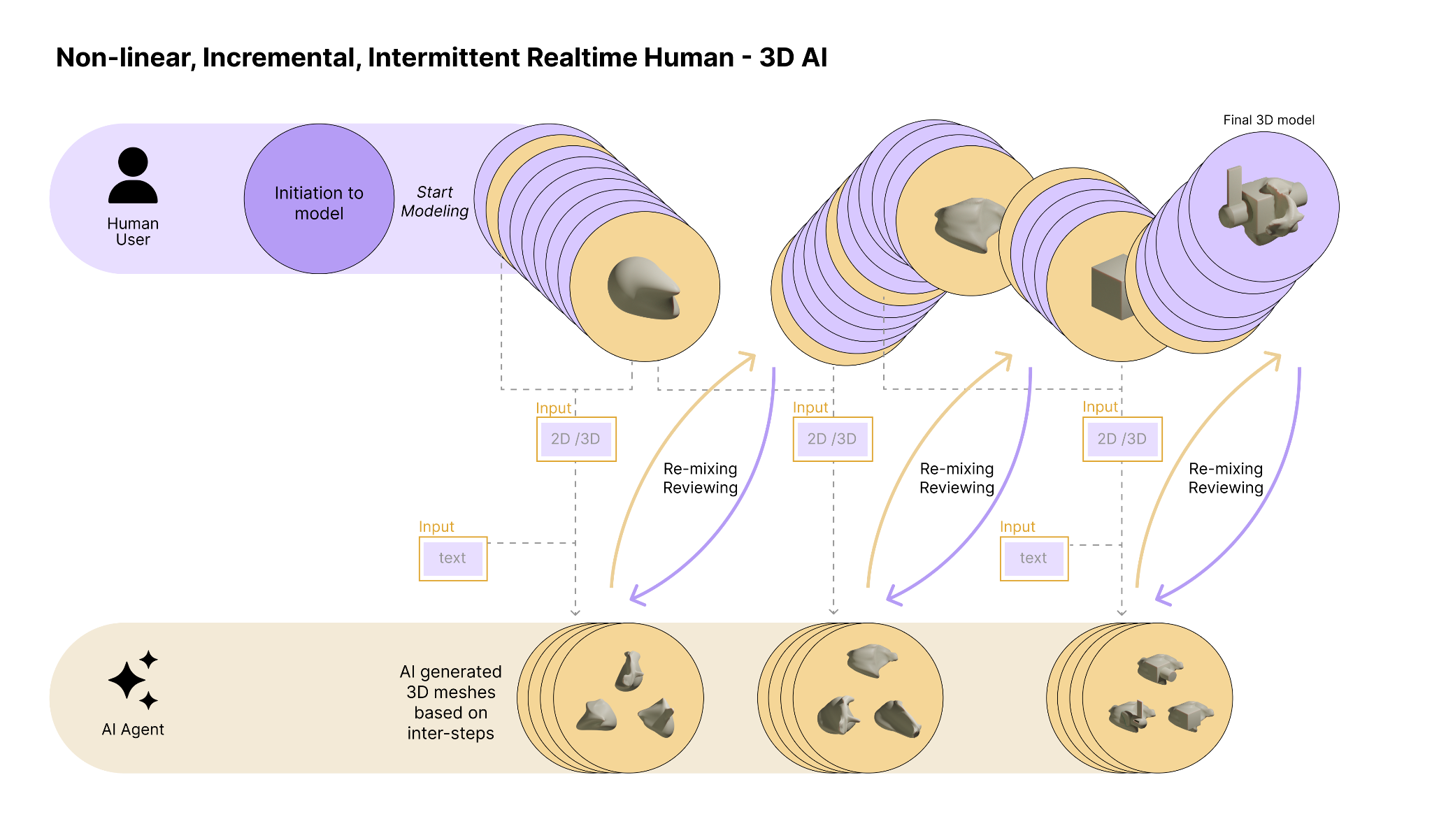

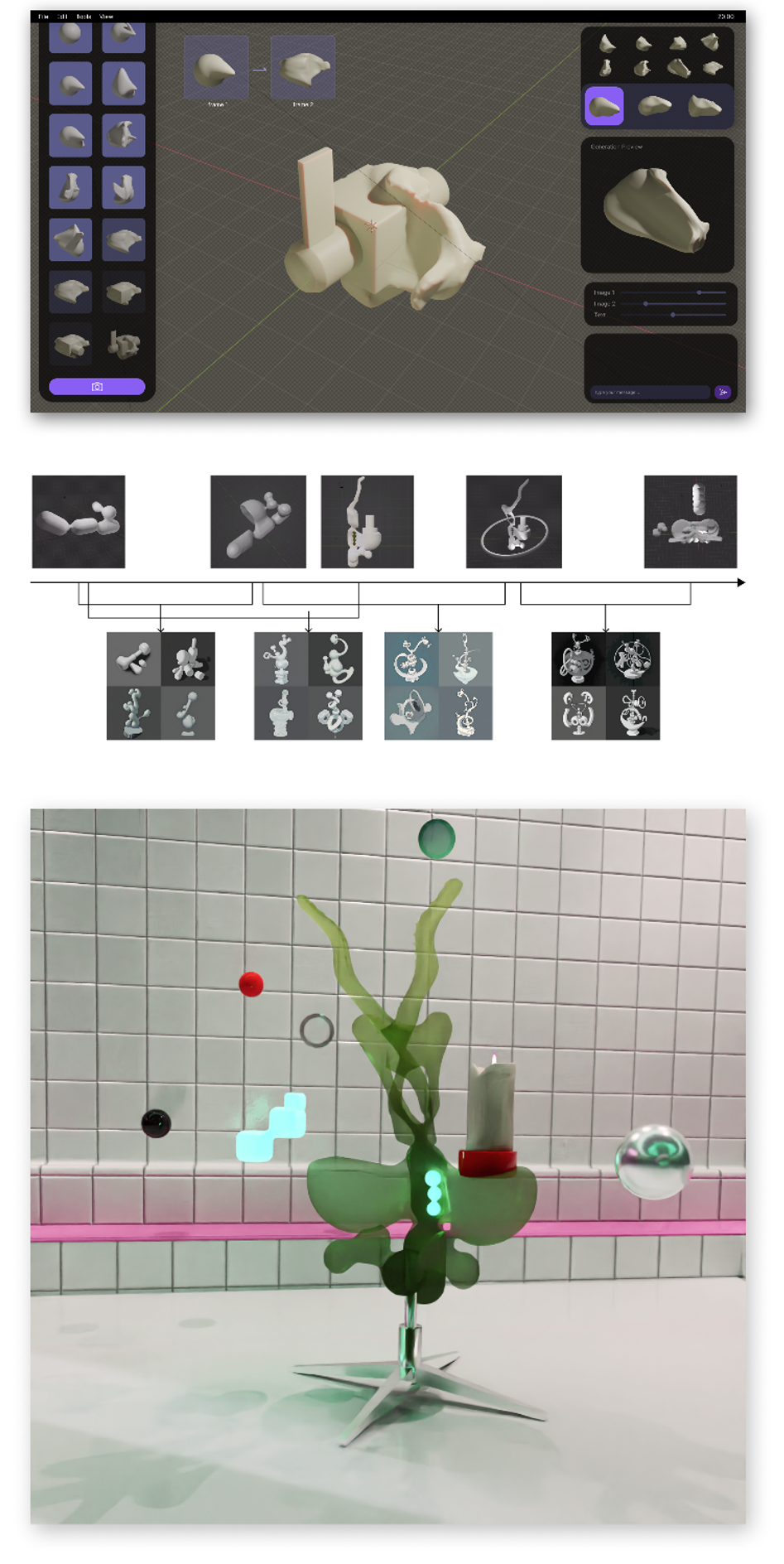

User Study example: Created by a participant during a 20-minute observation period, using Blender as their preferred digital modelling tool. The last image shows the rendered output.

Collaborators: Jingyi Wang, Jianing Nomy Yu, Jose Luis Garcia del Castillo y Lopez