Ghost in the Machine

Tangible Media

Embodied Cognition

Physical Computing

This box holds a “Ghost in the Machine”: a digital consciousness that lives inside the TV set within it. Sometimes she looks trapped and trying to get out, sometimes bored, and sometimes curious.

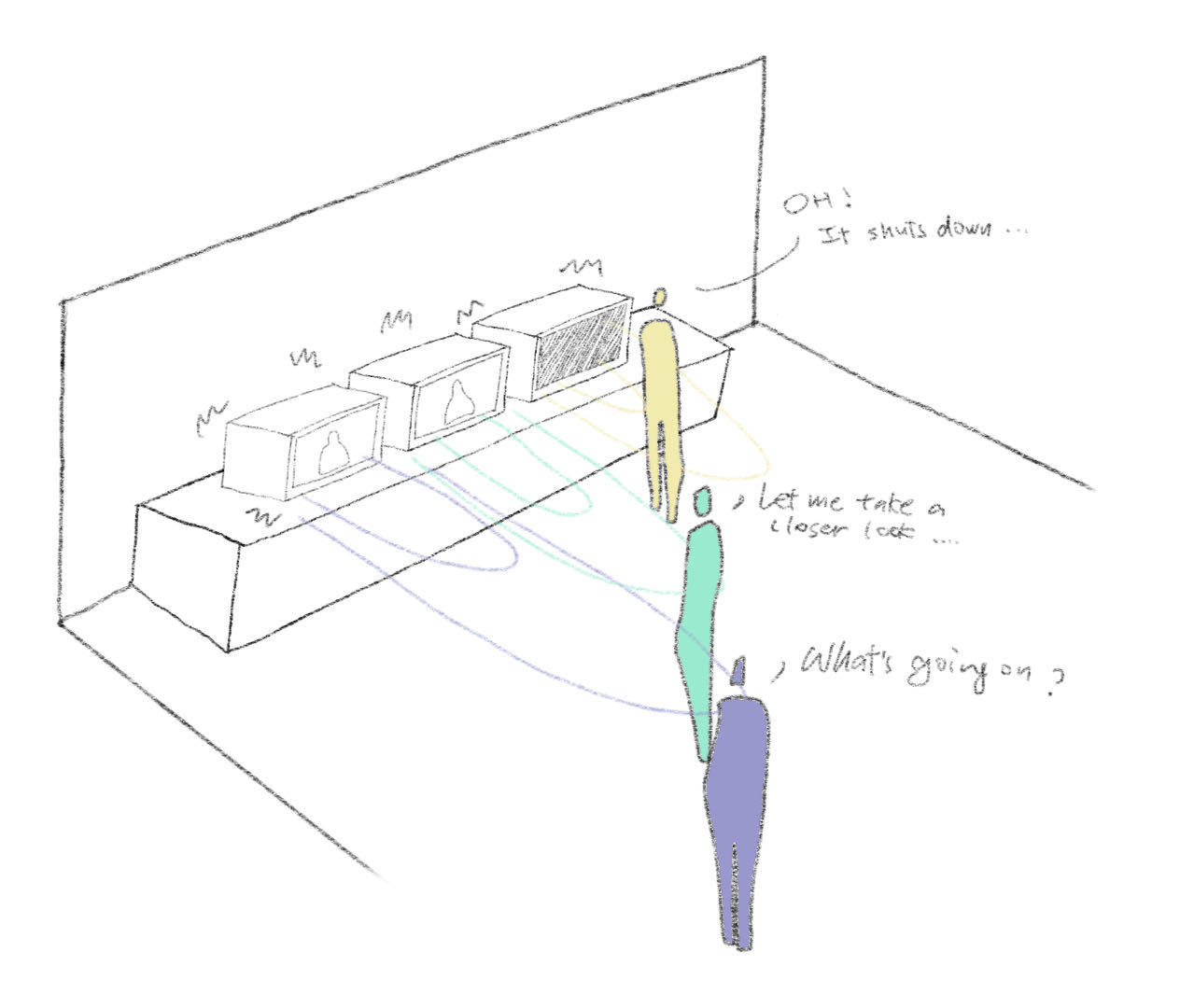

When a person enters the room, the consciousness in the box makes noise to attract their attention. But when the person comes close enough to peer into the box, the screen flickers and then abruptly switches off. It doesn’t want to be seen anymore.
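
The interaction above amounts to a small proximity-driven state machine. What follows is our illustrative sketch, not the installation's actual code. It assumes a single distance sensor that reports centimetres (or `None` when nothing is detected), and it assumes the screen stays dark until the visitor leaves the room. The thresholds, state names, and action strings (`play_sound`, `flicker_screen`, `screen_off`, and so on) are all hypothetical.

```python
from enum import Enum, auto
from typing import List, Optional

# Hypothetical thresholds (cm); the installation's real sensor and values are not documented.
ROOM_RANGE_CM = 400.0  # a reading closer than this means someone is in the room
PEER_RANGE_CM = 50.0   # a reading closer than this means someone is peering into the box


class State(Enum):
    IDLE = auto()     # nobody detected
    CALLING = auto()  # person in the room: make noise to attract attention
    HIDDEN = auto()   # person peered in: screen has flickered and switched off


class Ghost:
    def __init__(self) -> None:
        self.state = State.IDLE

    def step(self, distance_cm: Optional[float]) -> List[str]:
        """Update the state from one distance reading (None = nothing detected)
        and return the hypothetical output actions to perform."""
        present = distance_cm is not None and distance_cm < ROOM_RANGE_CM
        peering = present and distance_cm < PEER_RANGE_CM
        actions: List[str] = []

        if self.state is State.HIDDEN:
            # Assumption: stay dark until the visitor leaves the room entirely.
            if not present:
                self.state = State.IDLE
                actions.append("screen_on")
            return actions

        if peering:
            # Too close: stop calling, flicker, then switch off.
            self.state = State.HIDDEN
            actions += ["stop_sound", "flicker_screen", "screen_off"]
        elif present:
            # Someone is in the room: start making noise once.
            if self.state is not State.CALLING:
                actions.append("play_sound")
            self.state = State.CALLING
        else:
            # Room is empty again: go quiet.
            if self.state is State.CALLING:
                actions.append("stop_sound")
            self.state = State.IDLE
        return actions


if __name__ == "__main__":
    ghost = Ghost()
    # Simulated visit: empty room, enters, approaches, peers in, backs off, leaves.
    for reading in [None, 300.0, 120.0, 30.0, 200.0, None]:
        print(reading, ghost.step(reading))
```

Keeping the sensor reading separate from the returned action names means the same logic could drive real speaker and screen outputs, or be tested on a laptop without hardware.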

People often anthropomorphize computers, machines, and AI, ascribing human-like qualities to them. We describe them as having personalities, or as “deciding” or “wanting” to do something. This can create playful moments, but anthropomorphizing machines and AI can also be dangerous.

Our project plays with this dilemma: When a machine is (explicitly or implicitly) designed to express emotions, how should we respond?

__________________________________________

“AI isn’t close to becoming sentient – the real danger lies in how easily we’re prone to anthropomorphize it”

- Nir Eisikovits, article in The Conversation

(Professor of Philosophy, UMass Boston)

Year: 2024

Collaborators: Jingyi Wang, Jianing Nomy Yu