A neural-network walk through fading memory

Gen AI / Neural Networks (NN)

3D scanning and photogrammetry

Oral Recounting

Urban Spatial Experience

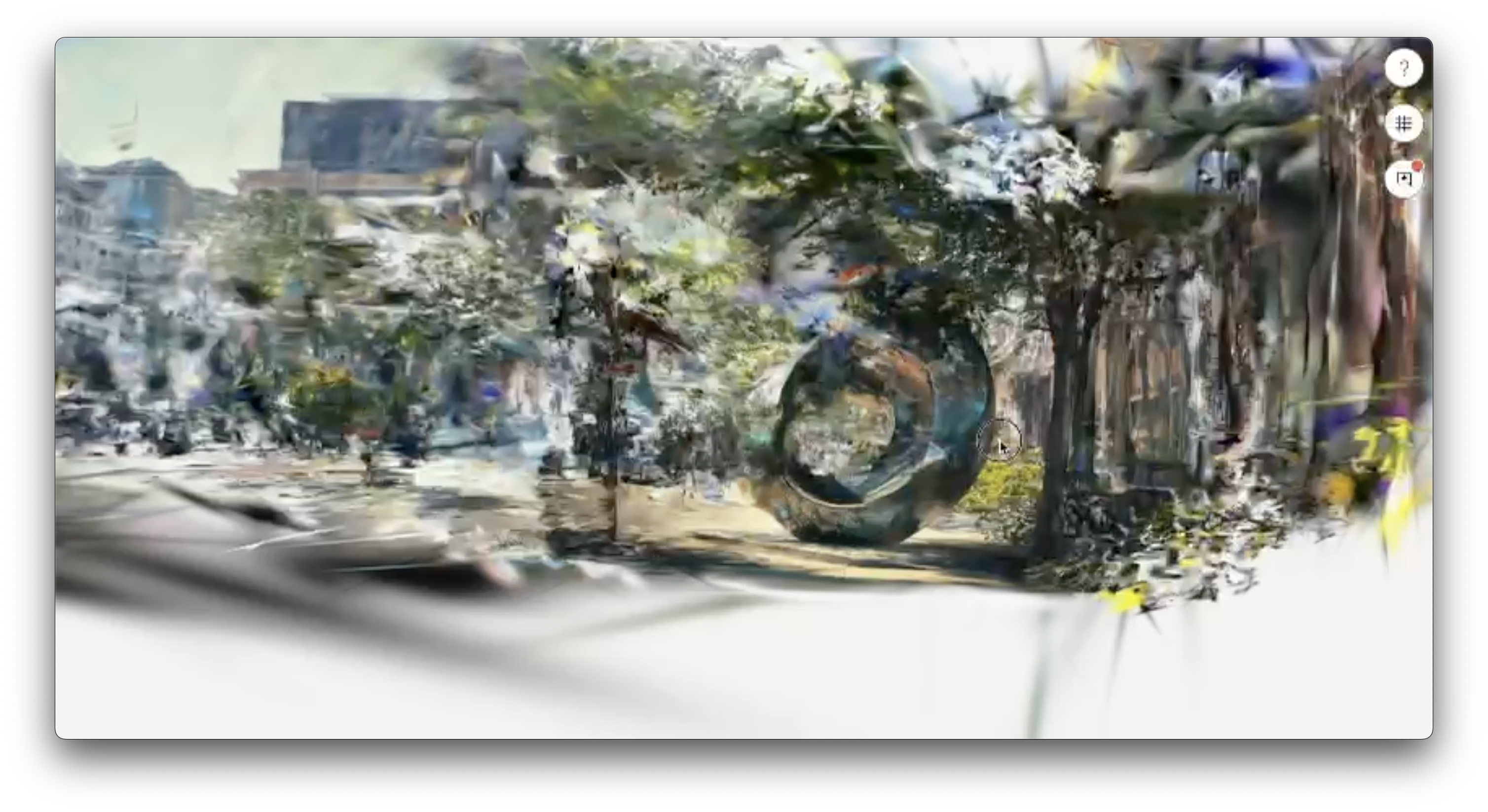

This video project uses 3D photogrammetry and generative AI to imagine how one might reconstruct oral stories of spatial experience, and to ask in what ways these methods might reveal the biases of the technologies being used. It also tries to capture the sensory, emotional, and experiential dimensions of spatial memory, and the way memories seem to dissipate the more we try to recall them. People’s spatial memory is heavily influenced by emotion: rather than an accurate recollection, it is a collection of scattered vignettes.

Input: An interview transcript with Person A about his 30-minute walk around Cambridge, in which he was asked to remember what he saw during his walk.

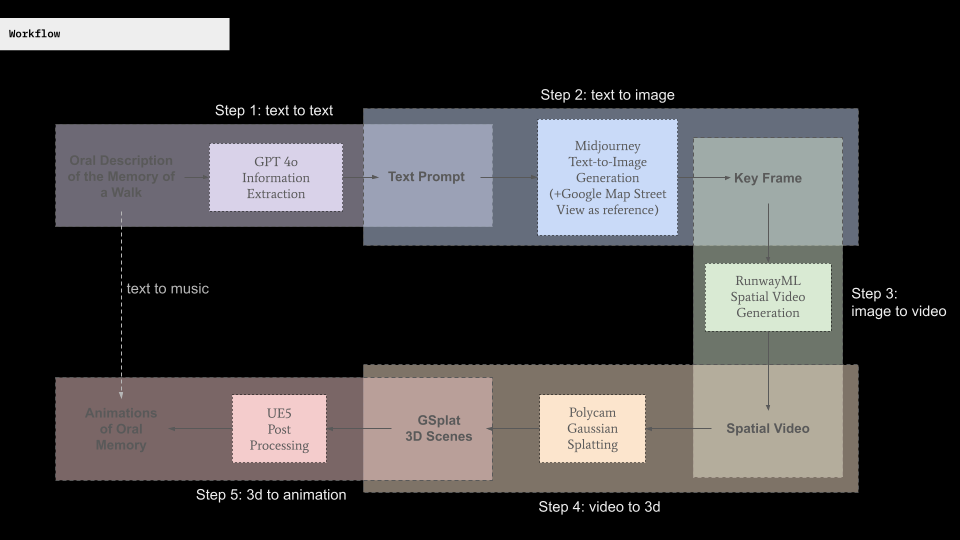

Tools: A daisy-chained series of neural networks (NN) made up of text-to-text, text-to-2D, and 2D-to-video generative AI tools, together with the 3D Gaussian Splatting method for rendering point clouds.
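As a rough illustration of what "daisy-chained" means here, the sketch below passes one artifact through three placeholder stages, each consuming the previous stage's output. It is only a schematic under stated assumptions: the stage names, the `Artifact` type, and the `make_stage` and `run_chain` helpers are hypothetical, and no real generative model is called. The actual tools used in the project are not named in this write-up.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Artifact:
    """One item moving through the chain (all fields are illustrative)."""
    kind: str                      # "transcript", "prompt", "image", or "video"
    payload: str                   # stand-in string instead of real media data
    history: List[str] = field(default_factory=list)


Stage = Callable[[Artifact], Artifact]


def make_stage(name: str, expects: str, produces: str) -> Stage:
    """Build a placeholder stage that only relabels and wraps its input."""
    def stage(item: Artifact) -> Artifact:
        if item.kind != expects:
            raise ValueError(f"{name} expects {expects!r}, got {item.kind!r}")
        return Artifact(
            kind=produces,
            payload=f"{name}({item.payload})",
            history=item.history + [name],
        )
    return stage


def run_chain(stages: List[Stage], first: Artifact) -> Artifact:
    """Feed each stage's output into the next stage, in order."""
    current = first
    for stage in stages:
        current = stage(current)
    return current


# Hypothetical stand-ins for the text-to-text, text-to-2D, and 2D-to-video tools.
chain = [
    make_stage("text_to_text", "transcript", "prompt"),
    make_stage("text_to_2d", "prompt", "image"),
    make_stage("2d_to_video", "image", "video"),
]

result = run_chain(chain, Artifact("transcript", "there was a weird sculpture"))
print(result.kind, result.history)
```

Because every stage only sees the previous stage's output, any distortion introduced early in the chain is carried forward and compounded, which is part of what makes the chain a useful way to surface the biases of each tool.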

Context: This is part of a continuing research exploration in:

- Biometrically measuring urban navigation and wayfinding

- Nonlinear human-AI design tool workflows: Rather than following the typical way generative AI tools are used today, I am seeking out less "deterministic" methods of translating a 2D image into 3D form, methods that leave more room for human design control and for human interpretation of the AI outputs. A point cloud (.ply) output gives a designer a starting scaffold for the form, rather than a final 3D polysurface model.
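To make the "point cloud as scaffold" idea a little more concrete, here is a minimal sketch of writing and reading a point cloud in the ASCII variant of the PLY format, using only positions (x, y, z). This is an assumption-laden simplification: the .ply files produced by Gaussian Splatting tools are typically binary and carry many more per-point properties, and the function names and sample points below are made up for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def to_ascii_ply(points: List[Point]) -> str:
    """Serialize xyz points as a minimal ASCII PLY document."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    body = [f"{x} {y} {z}" for x, y, z in points]
    return "\n".join(header + body) + "\n"


def from_ascii_ply(text: str) -> List[Point]:
    """Parse the xyz positions back out of a minimal ASCII PLY document."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "ply":
        raise ValueError("not a PLY document")
    count = None
    start = None
    for i, line in enumerate(lines):
        parts = line.split()
        if parts[:2] == ["element", "vertex"]:
            count = int(parts[2])
        if line.strip() == "end_header":
            start = i + 1
            break
    if count is None or start is None:
        raise ValueError("incomplete PLY header")
    points: List[Point] = []
    for line in lines[start:start + count]:
        x, y, z = (float(v) for v in line.split()[:3])
        points.append((x, y, z))
    return points


# Hypothetical scaffold points a designer might start from.
scaffold = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.5, 0.25)]
ply_text = to_ascii_ply(scaffold)
round_trip = from_ascii_ply(ply_text)
print(ply_text)
```

Since the file is just a list of positions, a designer can delete, move, or resample points before any surface is fitted, which is where the room for human interpretation comes from.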

interview quote: “there was a weird sculpture”

interview quote: “saw a couple smoking pot in a car… it had a strong smell”

interview quote: “a house that was blue, green, purple… odd”

interview quote: “I crossed the street so they don’t attack me”

interview quote: “when I saw Petsi Pies, I wanted to go in”

Year: 2024

Collaborators: Jingyi Wang, Jianing Nomy Yu