VR-AI Realtime Design Workflow

Design Process

AI x VR x Human

Computational Tools

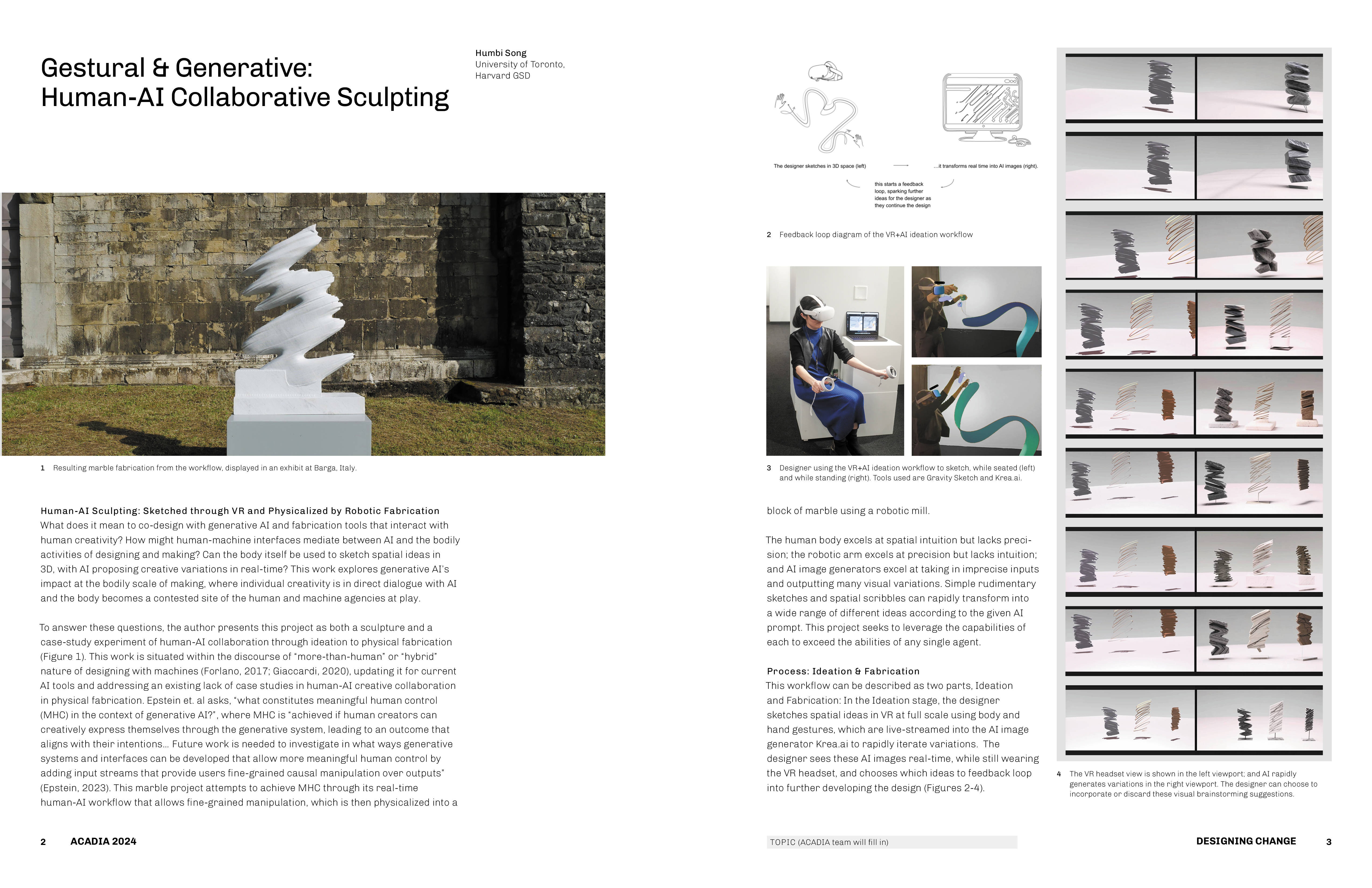

What does it mean to co-design with generative AI and fabrication tools that interact with human creativity? How might human-machine interfaces mediate between AI and the bodily activities of designing and making? Can the body itself be used to sketch spatial ideas in 3D, with AI proposing creative variations in real time? This work explores generative AI’s impact at the bodily scale of making, where individual creativity is in direct dialogue with AI and the body becomes a contested site of the human and machine agencies at play.

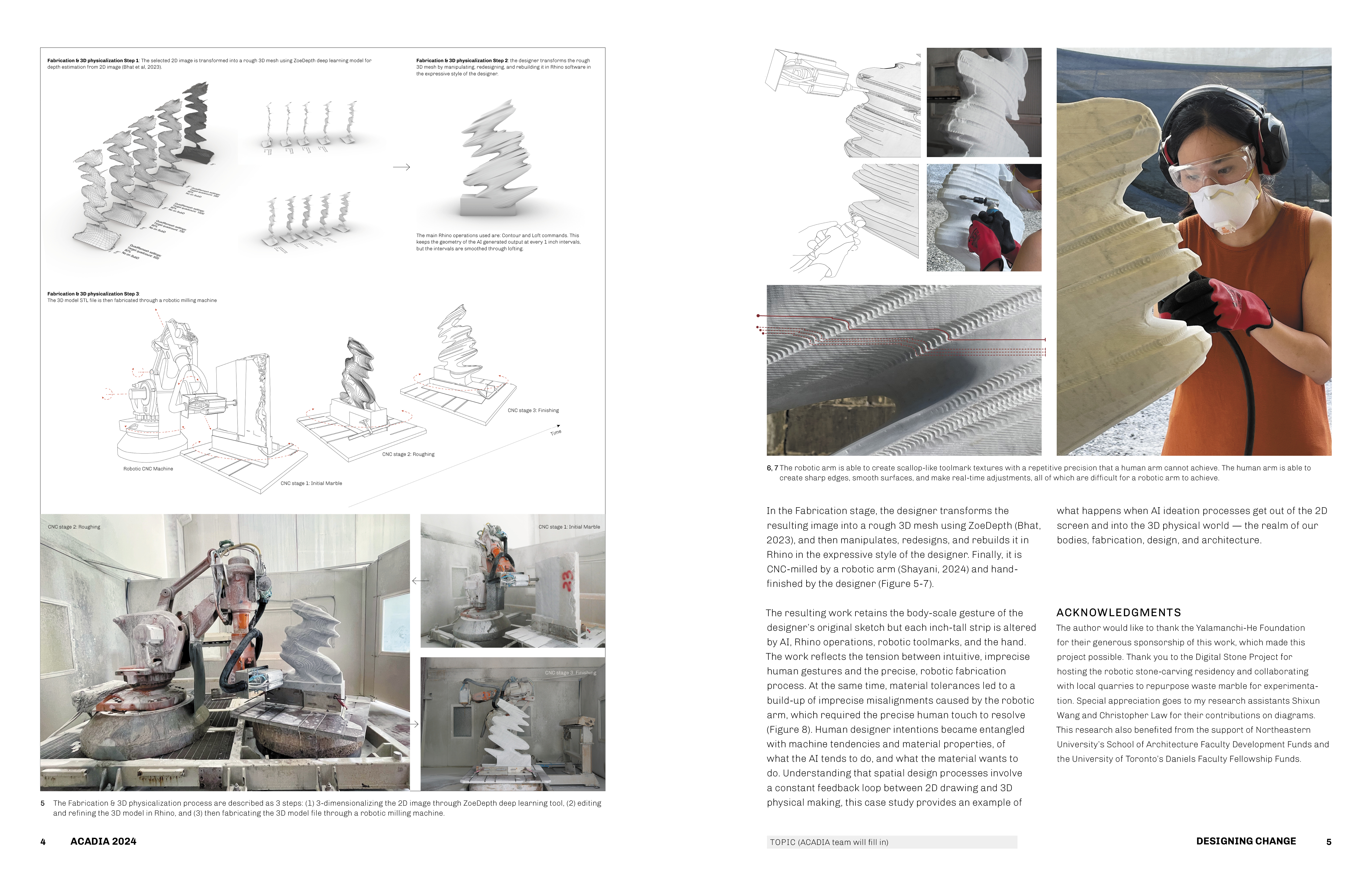

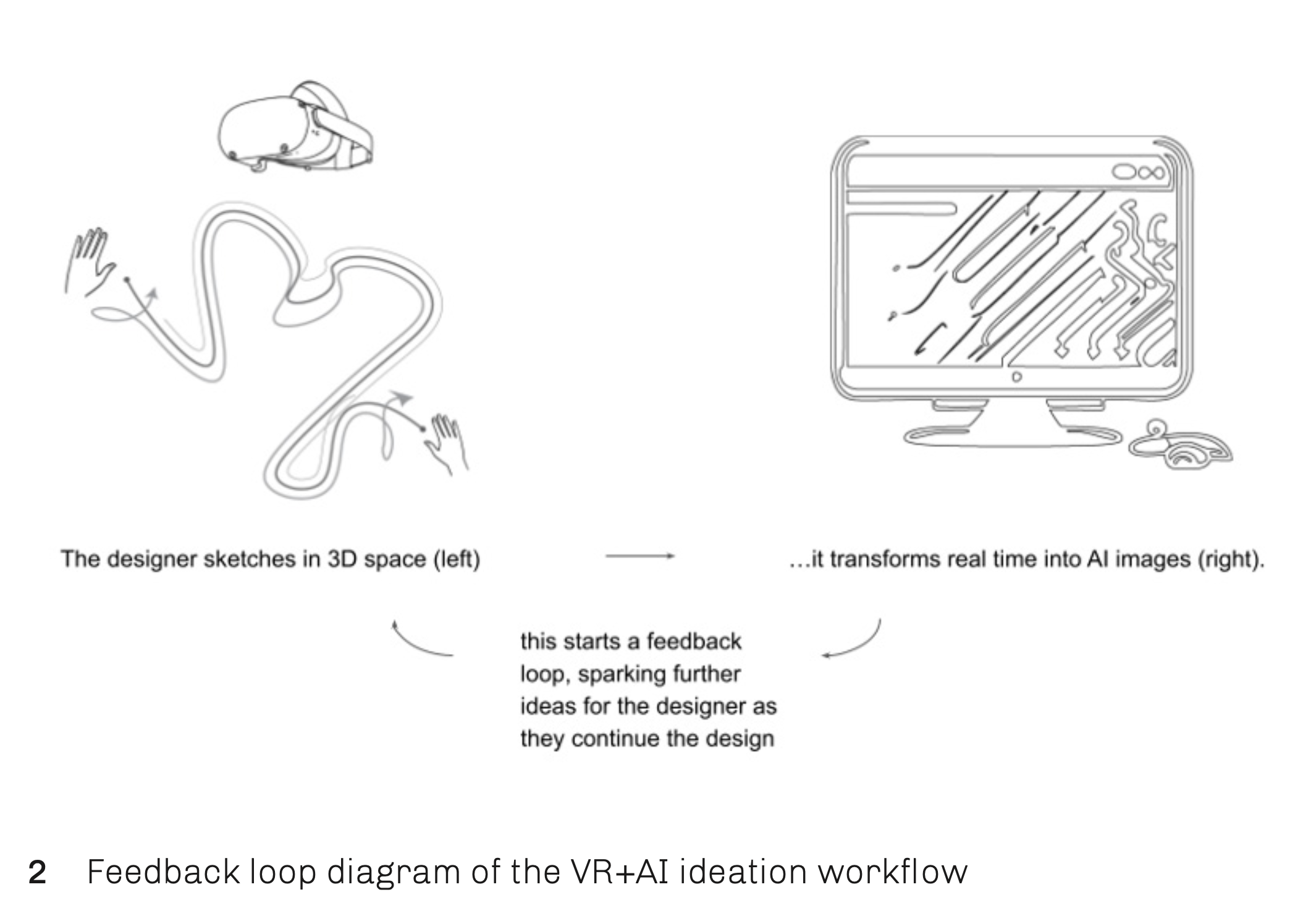

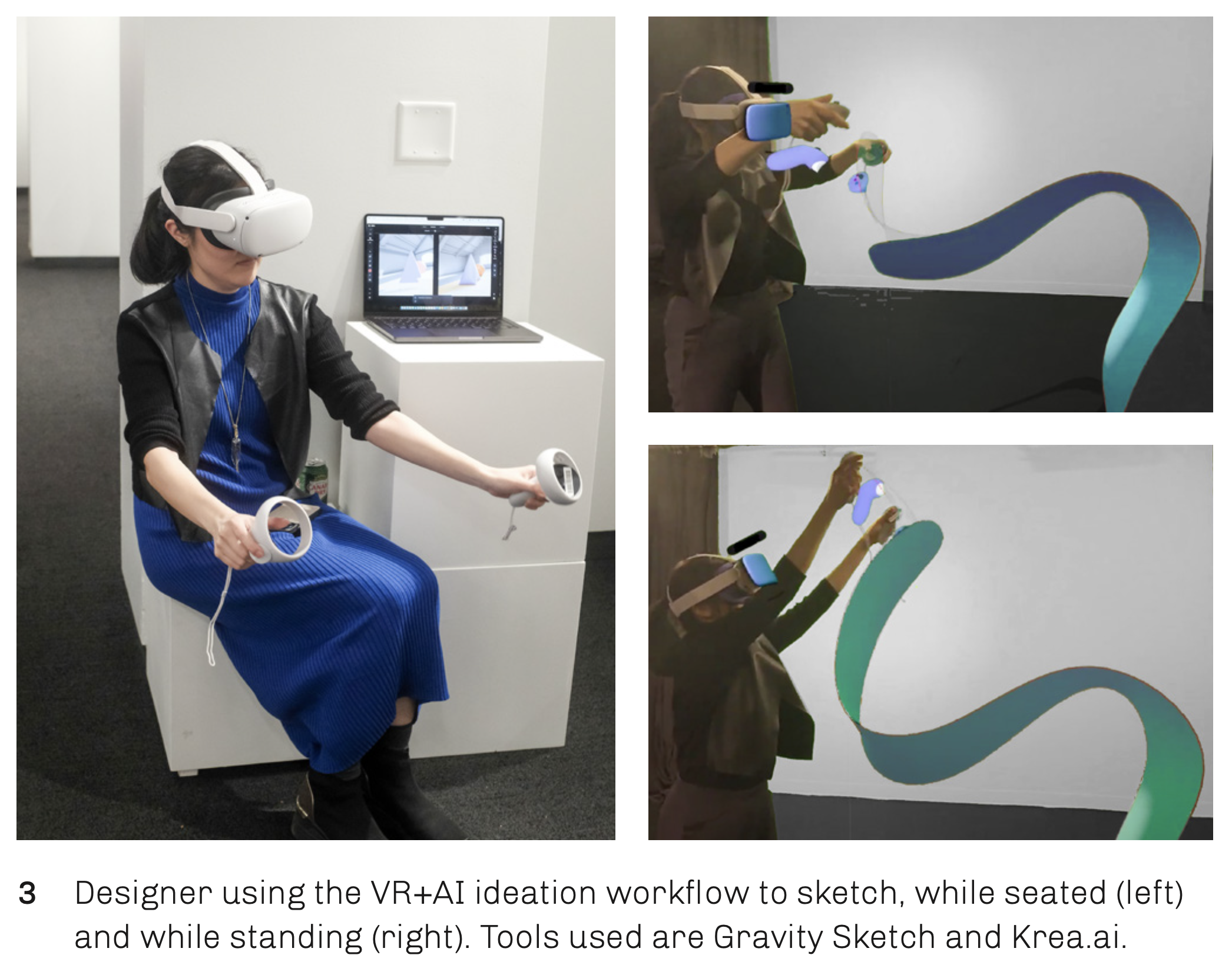

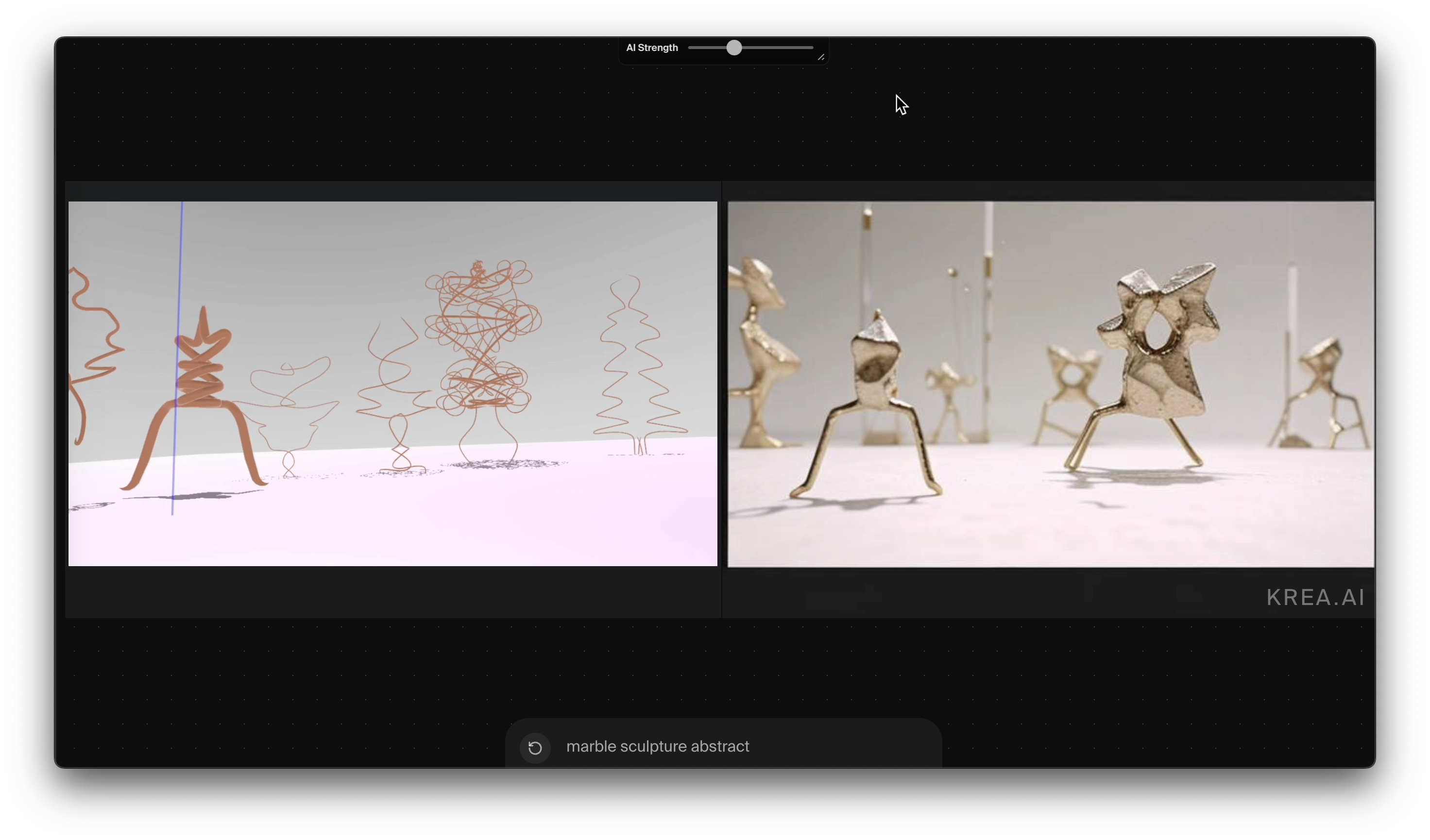

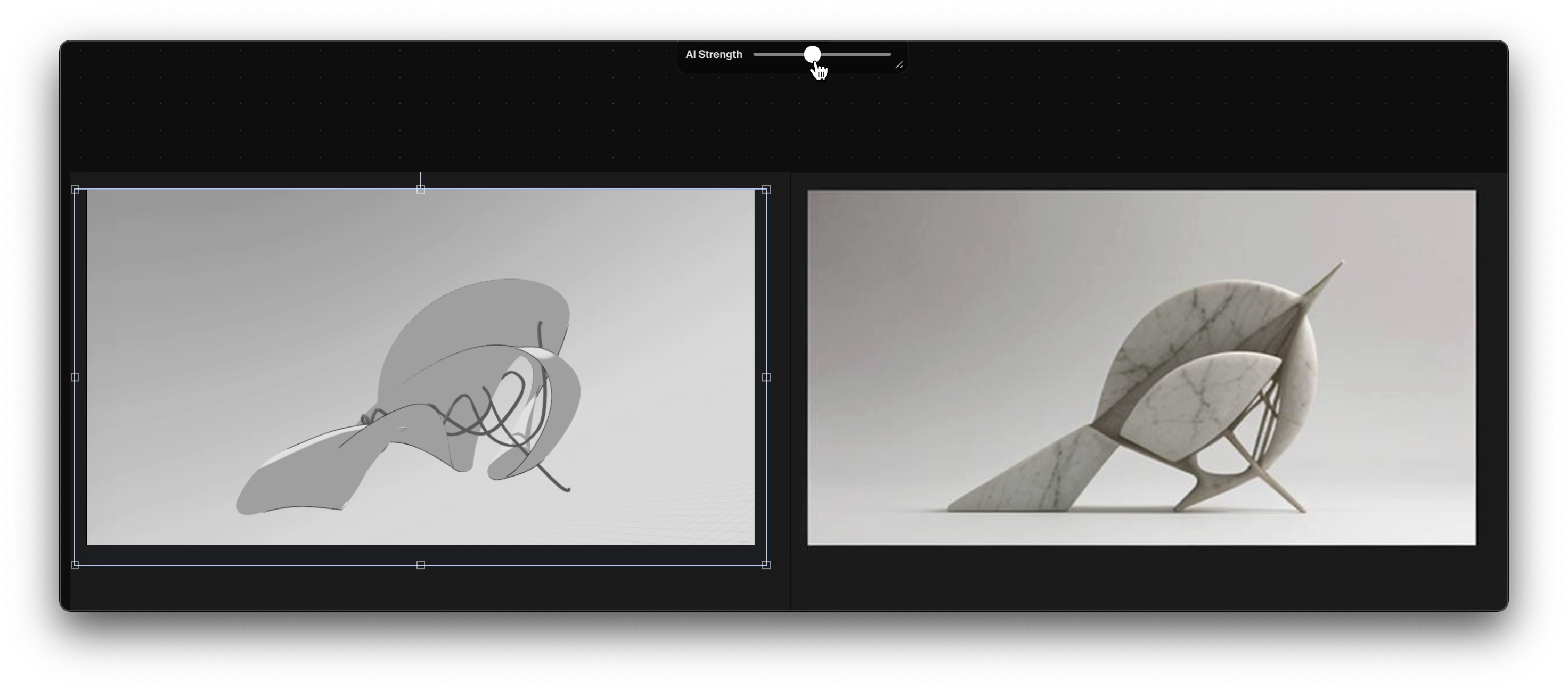

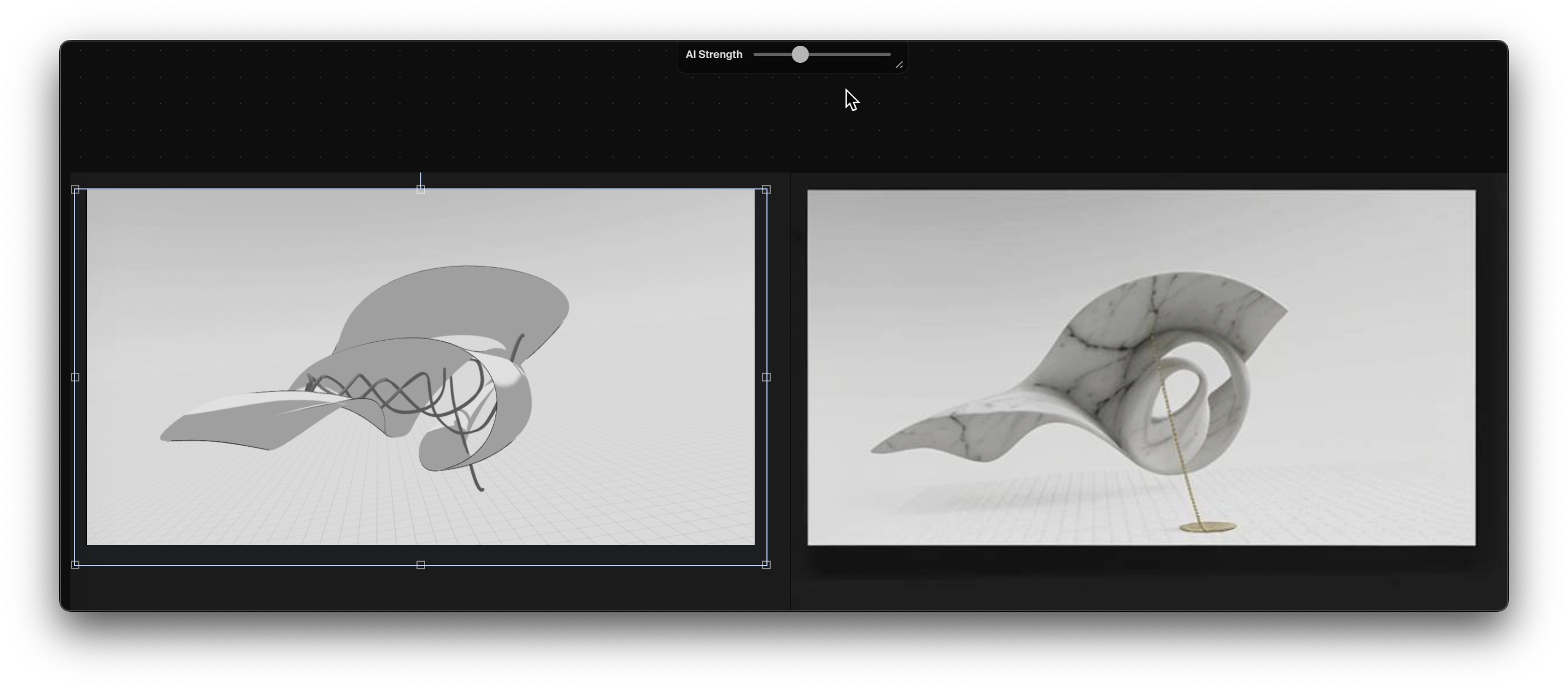

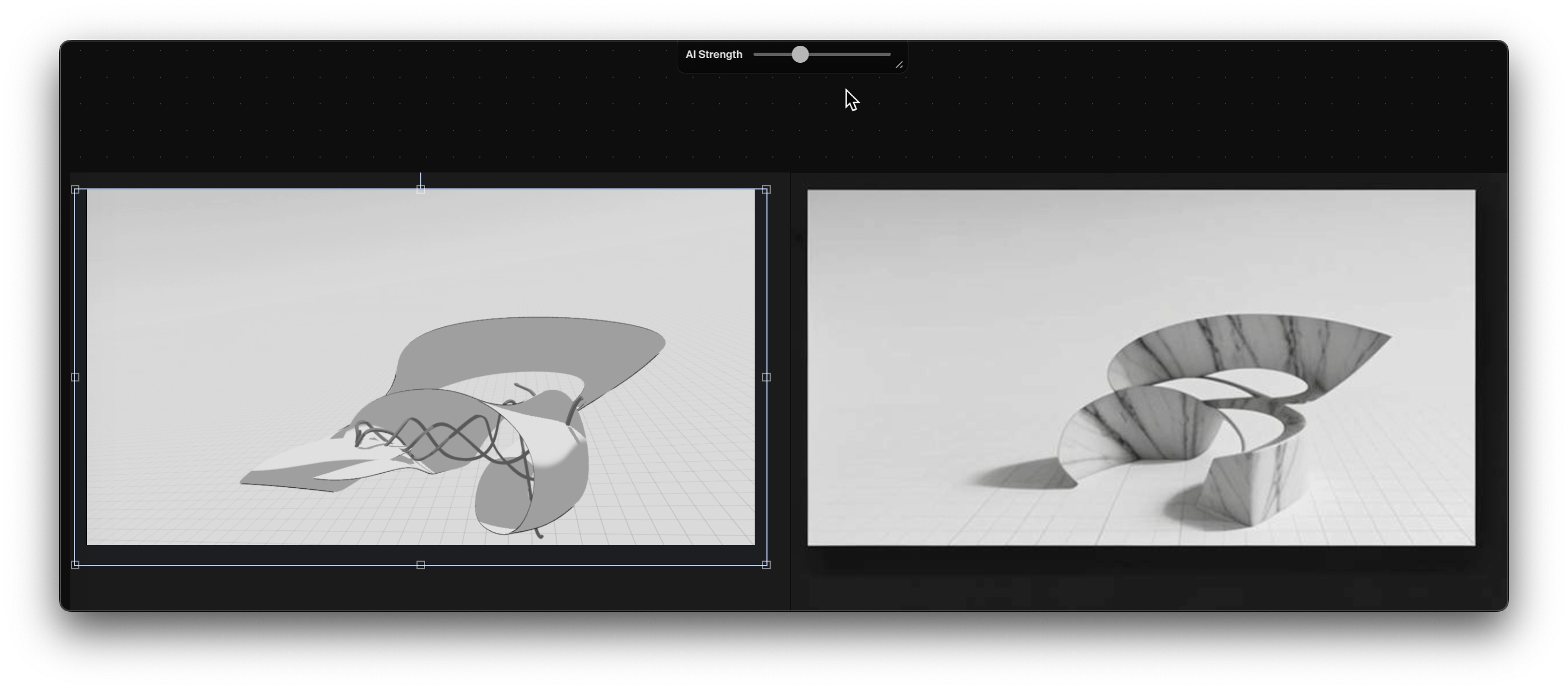

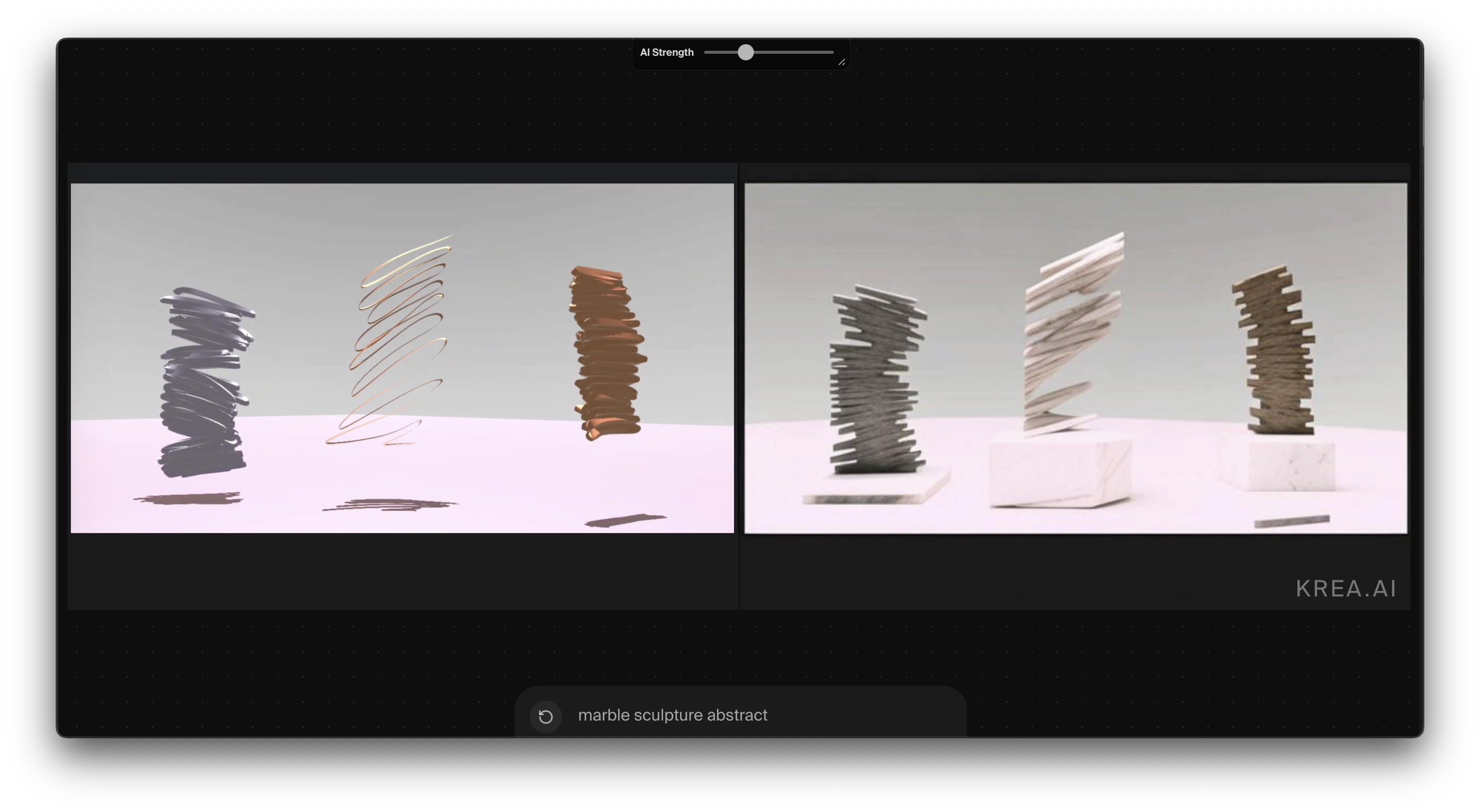

In Ideation, the designer sketches spatial ideas in VR at full scale using body and hand gestures, which are live-streamed into the AI image generator Krea to rapidly iterate variations. The designer sees these AI images in real time and chooses which ideas to feed back into the loop to develop the design further.

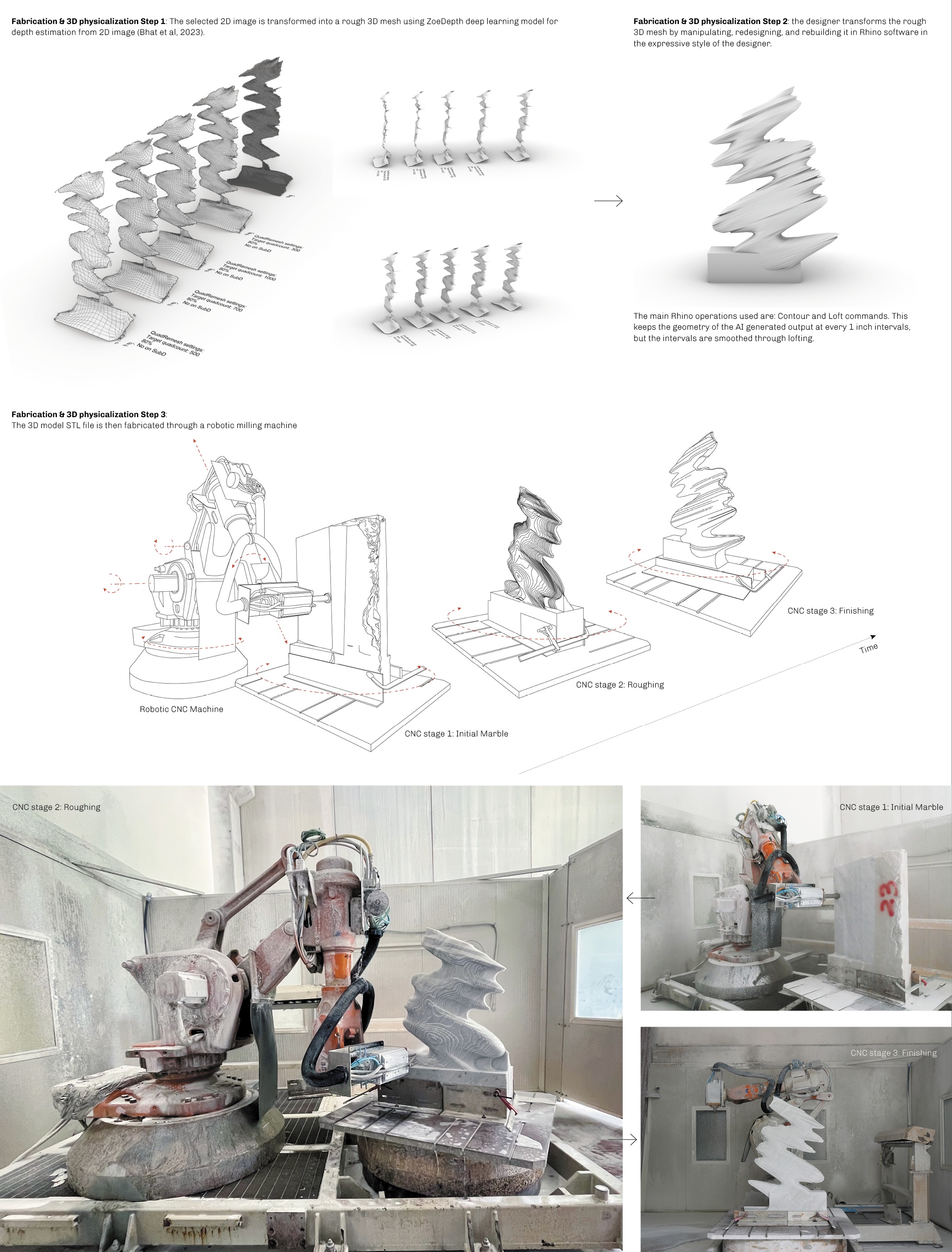

In Fabrication, the designer transforms the resulting image into a rough 3D mesh using ZoeDepth, then manipulates, redesigns, and rebuilds it in Rhino in the designer’s own expressive style. Finally, it is CNC-milled by a robotic arm and hand-finished by the designer.
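As a rough illustration of the image-to-mesh step, the sketch below turns a grid of depth values into a triangulated height-field mesh and writes it out as Wavefront OBJ text, a format Rhino can import. It is not the project's actual pipeline: it assumes the depth estimate (for example, the output of ZoeDepth) is already available as a plain 2D list of numbers, and the names `depth_to_mesh`, `to_obj`, `xy_scale`, and `z_scale` are hypothetical, introduced only for this example.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]


def depth_to_mesh(depth: List[List[float]], xy_scale: float = 1.0,
                  z_scale: float = 1.0) -> Tuple[List[Vertex], List[Face]]:
    """Turn a rectangular depth grid into a triangulated height-field mesh.

    Assumption: `depth` holds one value per pixel, row by row. Whether
    larger values mean nearer or farther depends on the depth model, so
    the sign of `z_scale` may need flipping.
    """
    rows = len(depth)
    cols = len(depth[0]) if rows else 0
    if rows < 2 or cols < 2:
        raise ValueError("depth grid must be at least 2 x 2")
    if any(len(row) != cols for row in depth):
        raise ValueError("depth grid must be rectangular")

    # One vertex per pixel: x and y from the pixel position, z from depth.
    vertices = [(c * xy_scale, r * xy_scale, depth[r][c] * z_scale)
                for r in range(rows) for c in range(cols)]

    # Two triangles per grid cell, with the same winding order throughout.
    faces: List[Face] = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c
            faces.append((i, i + 1, i + cols))
            faces.append((i + 1, i + cols + 1, i + cols))
    return vertices, faces


def to_obj(vertices: List[Vertex], faces: List[Face]) -> str:
    """Serialize the mesh as Wavefront OBJ text (face indices are 1-based)."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Tiny 2 x 3 stand-in for a real depth map.
    verts, tris = depth_to_mesh([[0.0, 1.0, 2.0], [3.0, 4.0, 5.0]])
    print(to_obj(verts, tris))
```

A mesh built this way is deliberately crude: one vertex per pixel, with no smoothing or hole filling. That fits its role here as a rough starting point to be reworked by hand in Rhino.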

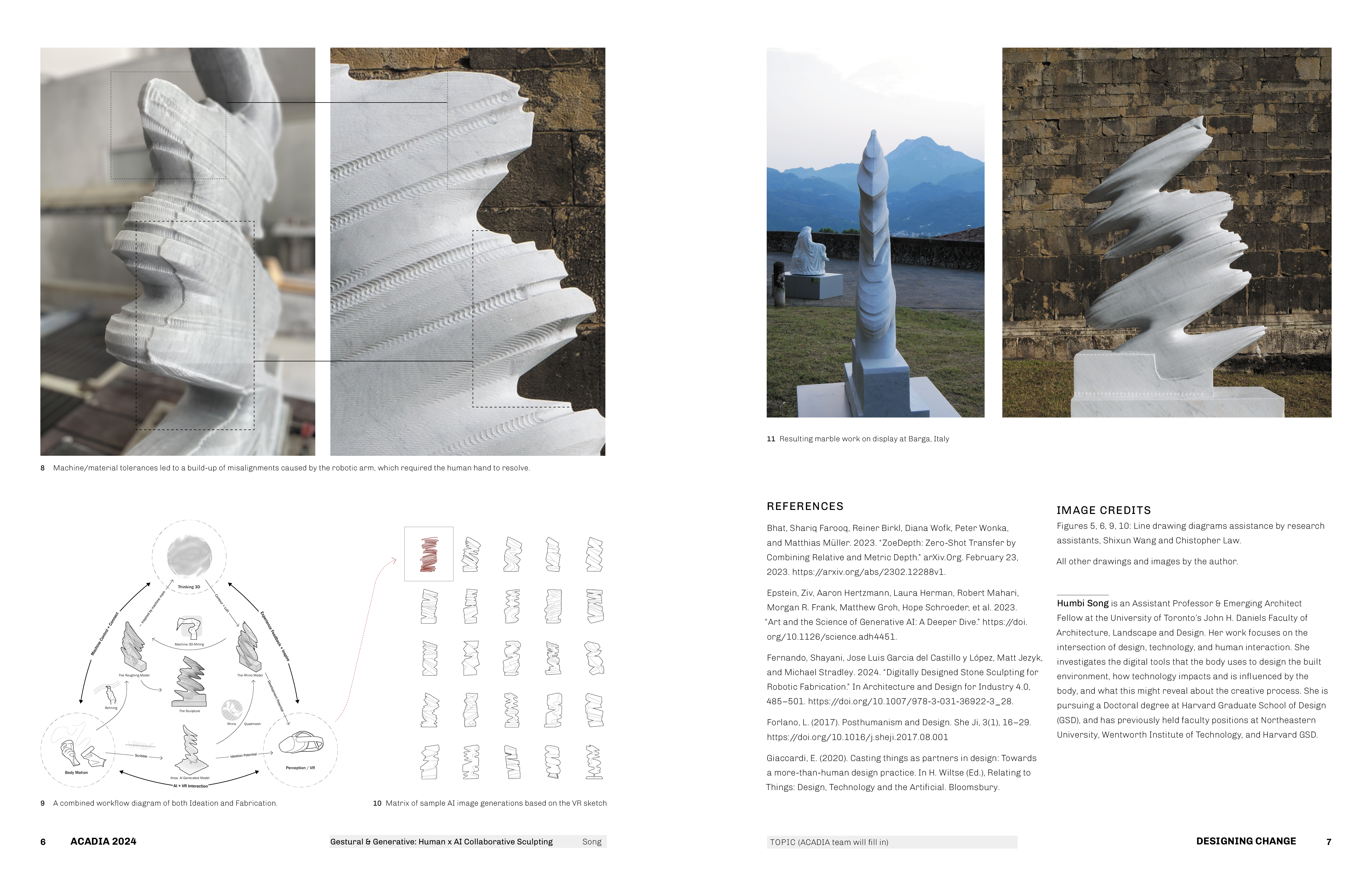

The resulting work retains the body-scale gesture of the designer’s original sketch, but each inch-tall strip is altered by AI, Rhino operations, robotic toolmarks, and the hand. It reflects the tension between intuitive, imprecise human gestures and the precise, robotic fabrication process. This case study shows what happens when AI ideation processes leave the 2D screen and enter the 3D physical world: the realm of our bodies, fabrication, design, and architecture.

See next: Entanglement (Sculpture), an example output of this process

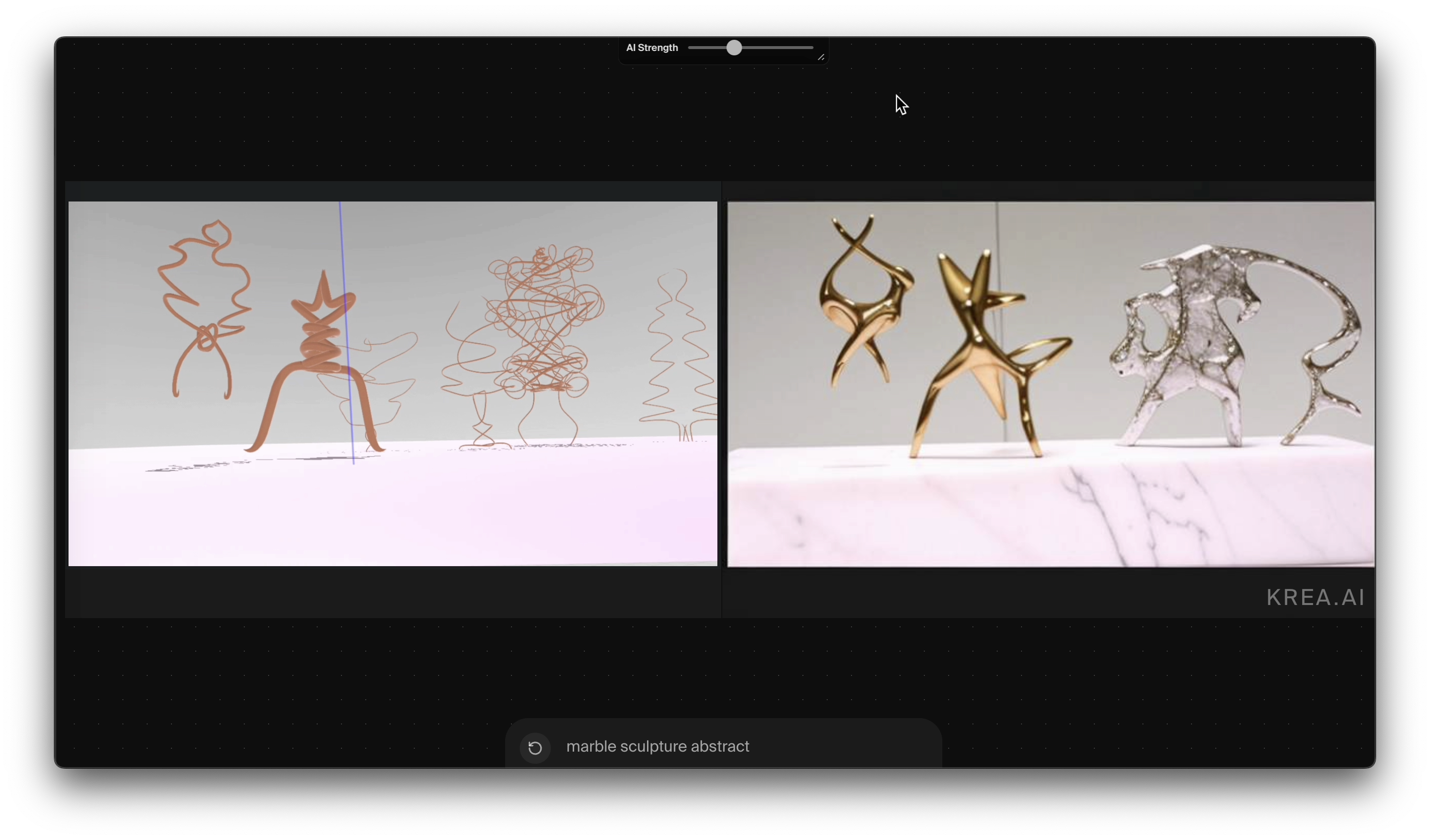

The left panel shows the designer’s view through VR; the right panel shows the AI transformations in real time.

VR-AI ideation feedback loop:

Experiment 1 - jewelry:

Experiment 2 - marble sculpture form:

Experiment 3 - vertical marble sculpture form:

Download: affiliated research publication at ACADIA 2024